Every AI engineer eventually faces the same moment.

You run a prompt, set a few params, the output looks nothing like what you expected, and suddenly you are staring at the screen wondering which part of your long prompt caused that strange response.

For many of us, working with AI agents started to feel like navigating a maze without a map. The deeper the prompts got, the harder it became to know which part influenced which section of the output. Debugging enterprise-level prompts often felt like finding a needle in a haystack.

That was the starting point for PromptTuner.in.

I wanted to build a tool that not only improves the workflow of prompt engineers and AI developers but also removes the guesswork from the entire process. Something that gives instant clarity about what influenced the output and why.

The intuition behind PromptTuner

My intuition was simple.

If we want AI agents to behave predictably, we must understand how each part of a prompt contributes to the final result.

Instead of treating prompts like magic spells that either work or fail, we should treat them like real engineering components. And for engineering, visibility is everything.

This led to a core idea.

What if we could map the influence of each prompt segment and visualize it in a way that even non-technical teams could understand?

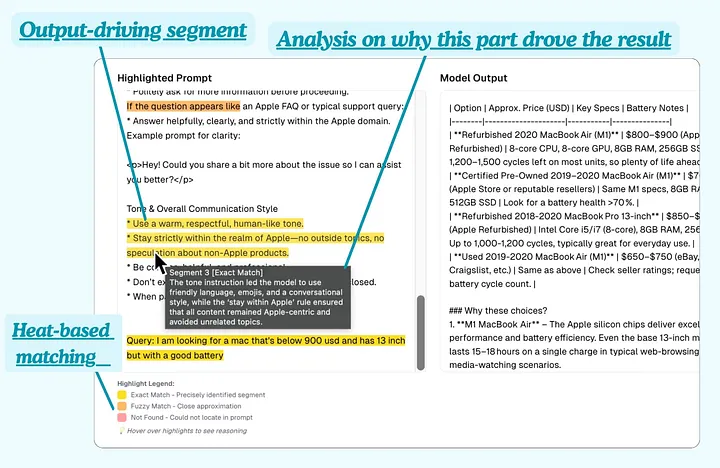

From that thought, heat-based prompt mapping was born.

A way to see exactly which segments affected the model output, how strongly they did, and why they mattered.

What PromptTuner does

PromptTuner.in analyzes your prompt and output together and highlights parts of your prompt by categorizing them into influence segments.

You get a structured view of exact matches, fuzzy matches, and parts that did not influence the model at all.
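To make the three categories concrete, here is a minimal sketch of how a prompt segment could be bucketed as an exact match, a fuzzy match, or no match against the output. This is not PromptTuner's actual implementation. The function names, the sentence-splitting rule, and the 0.75 threshold are assumptions made up for illustration, and plain string similarity is only a rough proxy for real influence.

```python
from difflib import SequenceMatcher


def split_segments(prompt: str) -> list[str]:
    """Split a prompt into sentence-like segments (a naive, illustrative rule)."""
    parts = prompt.replace("\n", ". ").split(". ")
    return [p.strip(" .") for p in parts if p.strip(" .")]


def best_window_ratio(segment: str, output: str) -> float:
    """Best similarity between the segment and any same-length word window of the output."""
    seg_words = segment.lower().split()
    out_words = output.lower().split()
    n = len(seg_words)
    if n == 0 or not out_words:
        return 0.0
    target = " ".join(seg_words)
    best = 0.0
    # Slide a window of n words across the output and keep the best score.
    for i in range(max(1, len(out_words) - n + 1)):
        window = " ".join(out_words[i:i + n])
        best = max(best, SequenceMatcher(None, target, window).ratio())
    return best


def classify(prompt: str, output: str, fuzzy_threshold: float = 0.75) -> list[tuple[str, str]]:
    """Label each prompt segment as 'exact', 'fuzzy', or 'none' (hypothetical labels)."""
    results = []
    for seg in split_segments(prompt):
        if seg.lower() in output.lower():
            label = "exact"  # segment text appears verbatim in the output
        elif best_window_ratio(seg, output) >= fuzzy_threshold:
            label = "fuzzy"  # a close paraphrase appears in the output
        else:
            label = "none"  # no visible trace of this segment in the output
        results.append((seg, label))
    return results


if __name__ == "__main__":
    prompt = "Always greet the user by name. Mention the refund policy clearly. Never use emojis"
    output = ("Hi Sam, always greet the user by name is our rule. "
              "We mention our refund policy clearly on the site.")
    for segment, label in classify(prompt, output):
        print(f"{label:>5}  {segment}")
```

A segment labeled "none" here only means its wording left no trace in the output. A negative instruction such as "Never use emojis" can still shape the response, which is exactly the kind of case where a purpose-built tool has to go beyond text overlap.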

The goal is simple.

Spend less time guessing.

Spend more time building.

And always stay in control of why your AI agent behaves the way it does.

Teams working on AI agents have already started using it as a debugging companion, especially when they need to tune high-stakes workflows or enterprise-level instructions.

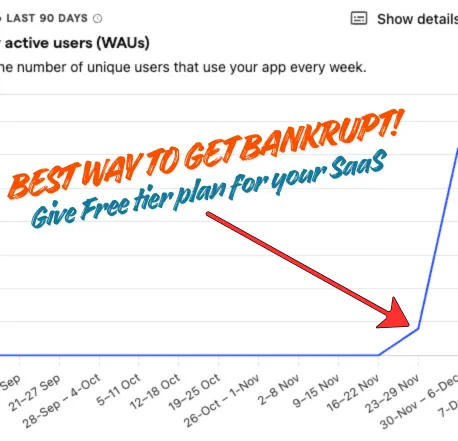

FYI, PromptTuner.in has a free tier (no card required).

The tech stack behind PromptTuner.in

Since many devs have asked how PromptTuner.in is built, here is the full stack.

Frontend

Next.js (App Router), TypeScript, Tailwind CSS, and Clerk for authentication.

Backend

FastAPI, LangChain, LangGraph, Groq, pytest, uvicorn and, most importantly, MongoDB. It would have taken a decade without Mongo; it helped me move fast. If you have ever used it, you know what I mean! If not, go ahead and try MongoDB.

Others (worth listing)

Razorpay: for international and local transactions

Vercel: for deployment of the frontend, the backend, and my daily cron runs

PostHog: for analytics and surveys

Let's Build the Future Together

PromptTuner.in started as a personal frustration.

I just wanted a way to understand my AI agent better. But within a day of launch, engineers, founders, and developers began using it and gave me validation that this pain point was real and shared.

There is still a long road ahead.

More features, better insights, deeper analysis, and a smoother experience are coming soon.

But the mission stays the same.

Make prompt engineering predictable, understandable, and accessible.

If you want to try PromptTuner.in or share feedback, I would love to hear from you.

Feel free to connect with me on LinkedIn: kumar-sahani. I'm always open to discussion and feedback.

Happy building.